Overview

Output Kernel Learning (OKL) is a kernel-based technology to solve learning problems with multiple outputs,

such as multi-class and multi-label classification or vectorial regression while automatically

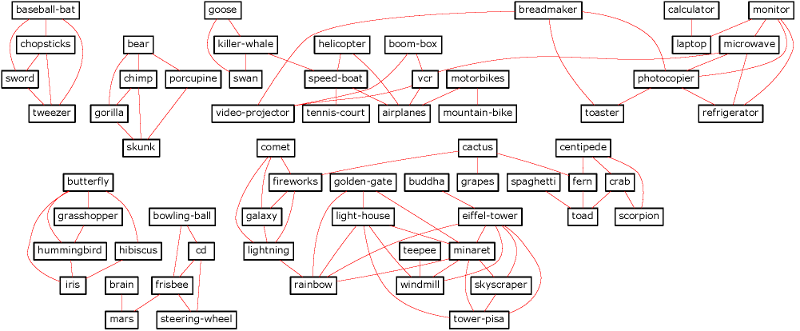

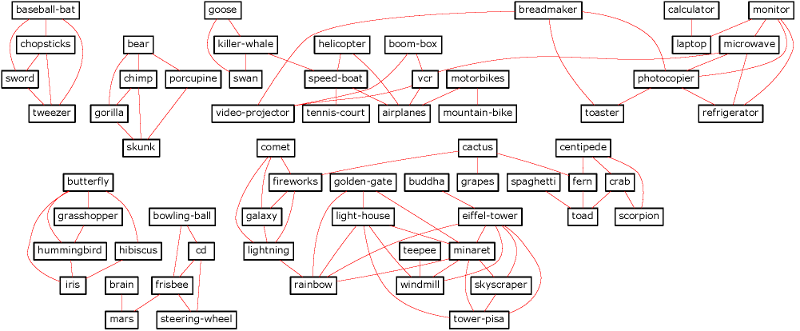

learning the relationships between the different output components. For instance, the following

graph represents the learned similarities between a subset of the classes for the popular

Caltech 256 dataset.

The graph has been obtained by jointly learning a kernel-based classifier and a similarity matrix between the classes.
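
As a rough illustration of how a learned class-similarity matrix can be turned into a graph like the one described above, the following minimal Python sketch keeps an edge between two classes whenever their symmetrized similarity exceeds a threshold. The page does not say how the graph was actually drawn, so the thresholding rule, the function name `similarity_edges`, the class labels, and all numeric values are assumptions made purely for illustration. They are not part of the OKL package and not results from Caltech 256.

```python
from typing import List, Sequence, Tuple


def similarity_edges(
    L: Sequence[Sequence[float]],
    labels: Sequence[str],
    threshold: float,
) -> List[Tuple[str, str, float]]:
    """Return (class_a, class_b, weight) for every pair of distinct classes
    whose symmetrized similarity is strictly greater than `threshold`,
    sorted from strongest to weakest."""
    n = len(labels)
    if len(L) != n or any(len(row) != n for row in L):
        raise ValueError("L must be a square matrix matching the label list")

    edges = []
    for i in range(n):
        for j in range(i + 1, n):
            # Average the two off-diagonal entries so that small asymmetries
            # in the input matrix do not matter.
            weight = 0.5 * (L[i][j] + L[j][i])
            if weight > threshold:
                edges.append((labels[i], labels[j], weight))

    edges.sort(key=lambda edge: edge[2], reverse=True)
    return edges


# Hypothetical 4-class similarity matrix (made-up numbers, assumption only).
labels = ["class_a", "class_b", "class_c", "class_d"]
L = [
    [1.0, 0.5, 0.0, 0.125],
    [0.5, 1.0, 0.25, 0.0],
    [0.0, 0.25, 1.0, 0.75],
    [0.125, 0.0, 0.75, 1.0],
]

edges = similarity_edges(L, labels, threshold=0.2)
for a, b, w in edges:
    print(f"{a} -- {b}  (similarity {w:.2f})")
```

The diagonal is skipped because a class is trivially similar to itself. Raising the threshold gives a sparser graph, which may be easier to read when the number of classes is large.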

References

F. Dinuzzo, C. S. Ong, P. Gehler, and G. Pillonetto. "Learning Output Kernels with Block Coordinate Descent." Proceedings of the International Conference on Machine Learning, 2011.

F. Dinuzzo and K. Fukumizu. "Learning Low-Rank Output Kernels." JMLR: Workshop and Conference Proceedings, Proceedings of the 3rd Asian Conference on Machine Learning, 2011.

Code for OKL

MATLAB code is available here. The package contains the following files:

- okl.m (Output kernel learning - training)

- lrokl.m (Low-rank output kernel learning - training)

- test.m (Example of test file)

- data/synthdata.mat (Synthetic data for test.m)

- gpl.txt (GNU license)

Experiments in the paper

The original experiments of (Dinuzzo et al., ICML 2011) were implemented in Python, using the software OptWok.

The code to reproduce the results in the paper is available here. The original datasets can be retrieved at the following links:

- toy data (625K)

- USPS (3.7M), copied from Antoine Bordes

- Caltech 101 and 256 are available via mldata.org.